Introduction

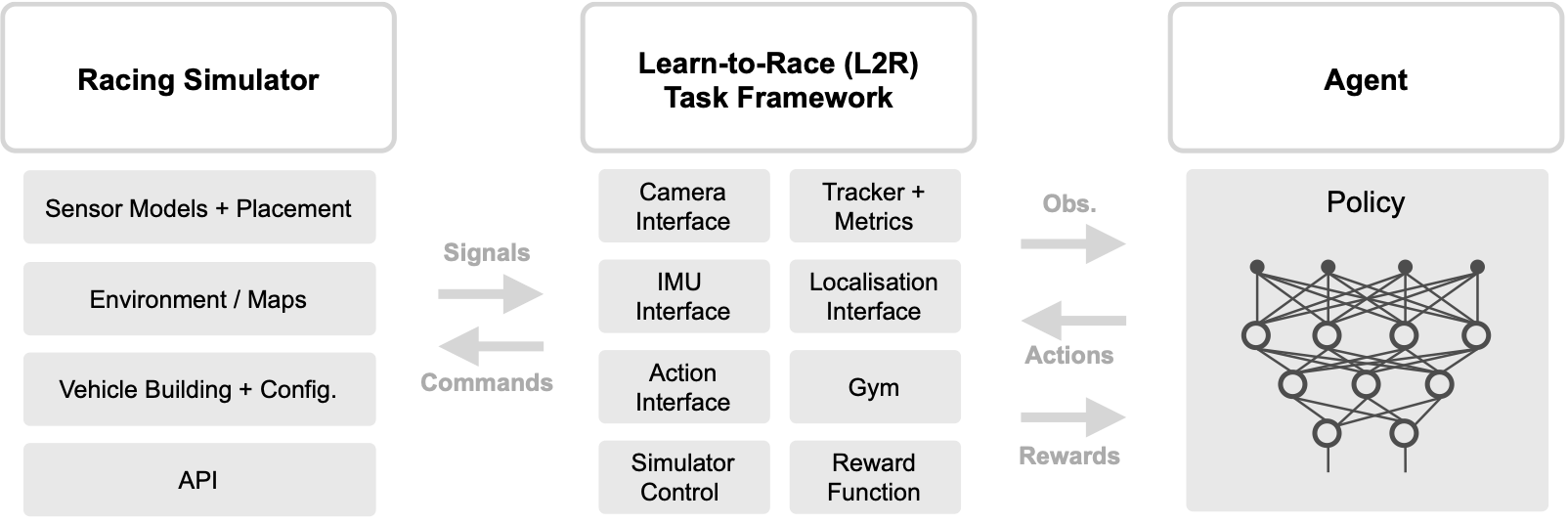

Learn-to-Race is an OpenAI Gym-compliant, multimodal control environment in which agents learn how to race. Unlike many simplistic learning environments, ours is built around Arrival’s high-fidelity racing simulator, which features full software-in-the-loop (SIL) and even hardware-in-the-loop (HIL) simulation capabilities. This simulator has played a key role in bringing autonomous racing technology to real life in the Roborace series, the world’s first extreme competition of teams developing self-driving AI.
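Because the environment is Gym-compliant, an agent interacts with it through the standard `reset()`/`step()` control loop. The sketch below illustrates that loop against a hypothetical toy environment (`ToyRaceEnv`), not the actual L2R environment class, whose constructor, observation keys, action space, and reward are not described here. It assumes the classic Gym convention in which `step()` returns `(observation, reward, done, info)`; newer Gym and Gymnasium releases instead return `terminated` and `truncated` flags separately.

```python
import random
from typing import Dict, List, Tuple


class ToyRaceEnv:
    """Hypothetical stand-in for a Gym-style racing environment (NOT the L2R API).

    Observations are a dict of modalities, loosely mirroring a multimodal setup:
    a tiny fake "camera" frame and a [position, speed] "pose" vector.
    """

    def __init__(self, track_length: float = 100.0, max_steps: int = 200):
        self.track_length = track_length
        self.max_steps = max_steps

    def _obs(self) -> Dict[str, List]:
        # Fake 2x2 grayscale "camera" frame plus low-dimensional state.
        camera = [[random.random() for _ in range(2)] for _ in range(2)]
        return {"camera": camera, "pose": [self.position, self.speed]}

    def reset(self) -> Dict[str, List]:
        self.position, self.speed, self.steps = 0.0, 0.0, 0
        return self._obs()

    def step(self, action: Tuple[float, float]):
        steering, accel = action  # assumed to lie in [-1, 1]; not enforced here
        self.speed = max(0.0, self.speed + accel)
        # Hard steering costs some forward progress in this toy model.
        progress = self.speed * (1.0 - 0.5 * abs(steering))
        self.position += progress
        self.steps += 1
        reward = progress  # toy reward: distance covered this step
        done = self.position >= self.track_length or self.steps >= self.max_steps
        info = {"lap_complete": self.position >= self.track_length}
        return self._obs(), reward, done, info


def run_episode(env, policy) -> Tuple[float, dict]:
    """Classic Gym-style control loop: reset once, then step until done."""
    obs = env.reset()
    total_reward, done, info = 0.0, False, {}
    while not done:
        action = policy(obs)
        obs, reward, done, info = env.step(action)
        total_reward += reward
    return total_reward, info


if __name__ == "__main__":
    env = ToyRaceEnv()
    # Trivial policy: drive straight with constant acceleration.
    total, info = run_episode(env, lambda obs: (0.0, 1.0))
    print(f"return={total:.1f}, lap_complete={info['lap_complete']}")
    # prints: return=105.0, lap_complete=True
```

Swapping in a real agent only changes the `policy` callable; the loop itself is the part a Gym-compliant environment standardises.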

Selected feature highlights

- High-fidelity racing maps and map generation: The L2R framework and the Arrival Autonomous Racing Simulator let agents learn to race on high-precision models of real-world racing tracks, such as the famed Thruxton Circuit (UK) and the North Road Track at the Las Vegas Motor Speedway. Users can also construct their own maps using the map-builder mode.

- Flexible training environment: We generated a rich, multimodal dataset of expert demonstrations to facilitate offline learning paradigms such as imitative modeling, multitask learning, and transfer learning. For online training, as in reinforcement learning, the framework also provides a realistic simulation environment.

- Real-world communication protocols: The simulator supports V2V and V2I communication through its V2X subsystem (UDP), with the ability to visualise received objects and to generate its own V2V/V2I streams to the outside world. Additionally, the simulator implements full CAN (Controller Area Network) buses.

- Full control of vehicle sensor suite: The simulator supports RGB, depth, segmentation, GPS, and IMU sensor modalities, as well as the ability to add, remove, or edit sensors in real time using the sensor placement mode.

- Full control of vehicle mechanics: The simulator provides a vehicle builder mode that enables quick prototyping of new vehicle types, including the ability to add, develop, and customise (i) the physical vehicle model, (ii) the vehicle's mechanical components (steering, braking, motors, differential, transmission, etc.), and (iii) vehicle control units for hardware-in-the-loop (HIL) or software-in-the-loop (SIL) modes.

- Full control of environmental state: The simulator's state is managed through a web-socket interface that supports two-way communication and lets clients update the simulator state, including changing the map, changing the vehicle type and pose, changing the input mode, toggling debugging routines and sensors, and modifying vehicle and sensor parameters.

- Support for CARLA: The Arrival Autonomous Racing Simulator supports full integration with the CARLA simulator, making it possible to use both simultaneously.

- Autonomous racing baselines: We provide L2R baselines as runnable agents in CARLA, namely soft actor-critic, conditional imitation learning, and a model-predictive controller.
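The V2X subsystem listed above is described as running over UDP. The simulator's actual V2X message schema, encoding, and ports are not documented here, so the sketch below only illustrates the transport: it sends one object as a single UDP datagram over loopback and decodes it on the receiving side. The JSON encoding, the field names, and the helper functions `send_v2x_message`/`receive_v2x_message` are hypothetical, chosen purely for illustration.

```python
import json
import socket


def send_v2x_message(payload: dict, host: str, port: int) -> None:
    """Send one JSON-encoded object as a single UDP datagram (hypothetical format)."""
    data = json.dumps(payload).encode("utf-8")
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(data, (host, port))


def receive_v2x_message(sock: socket.socket, bufsize: int = 65507) -> dict:
    """Block (up to the socket's timeout) for one datagram and decode it as JSON."""
    data, _addr = sock.recvfrom(bufsize)  # 65507 = max UDP payload over IPv4
    return json.loads(data.decode("utf-8"))


if __name__ == "__main__":
    # Bind a receiver to an OS-assigned loopback port, then send it one message.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver:
        receiver.bind(("127.0.0.1", 0))
        receiver.settimeout(2.0)
        port = receiver.getsockname()[1]
        # Hypothetical V2V object: field names are illustrative only.
        msg = {"id": "car_2", "x": 12.5, "y": -3.0, "speed_mps": 41.2}
        send_v2x_message(msg, "127.0.0.1", port)
        print(receive_v2x_message(receiver))
```

UDP is connectionless and does not guarantee delivery or ordering, which suits high-rate state broadcasts where a stale message is simply superseded by the next one.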

Learn-to-Race: A Multimodal Control Environment for Autonomous Racing

James Herman*, Jonathan Francis*, Siddha Ganju, Bingqing Chen, Anirudh Koul, Abhinav Gupta, Alexey Skabelkin, Ivan Zhukov, Max Kumskoy, Eric Nyberg

ICCV 2021

@inproceedings{herman2021learn,
  title={Learn-to-Race: A Multimodal Control Environment for Autonomous Racing},
  author={Herman, James and Francis, Jonathan and Ganju, Siddha and Chen, Bingqing and Koul, Anirudh and Gupta, Abhinav and Skabelkin, Alexey and Zhukov, Ivan and Kumskoy, Max and Nyberg, Eric},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  pages={9793--9802},
  year={2021}
}

Safe Autonomous Racing via Approximate Reachability on Ego-vision

Bingqing Chen, Jonathan Francis, Jean Oh, Eric Nyberg, Sylvia L. Herbert

arXiv 2021

@misc{chen2021safe,
  title={Safe Autonomous Racing via Approximate Reachability on Ego-vision},
  author={Bingqing Chen and Jonathan Francis and Jean Oh and Eric Nyberg and Sylvia L. Herbert},
  year={2021},
  eprint={2110.07699},
  archivePrefix={arXiv},
  primaryClass={cs.RO}
}

Other Related Papers

(White Paper) Learn-to-Race Challenge 2022: Benchmarking Safe Learning and Cross-domain Generalisation in Autonomous Racing

Jonathan Francis*, Bingqing Chen*, Siddha Ganju*, Sidharth Kathpal*, Jyotish Poonganam*, Ayush Shivani*, Vrushank Vyas, Sahika Genc, Ivan Zhukov, Max Kumskoy, Jean Oh, Eric Nyberg, Sylvia L. Herbert

arXiv 2022

@misc{francis2022l2rarcv,
  title={Learn-to-Race Challenge 2022: Benchmarking Safe Learning and Cross-domain Generalisation in Autonomous Racing},
  author={Jonathan Francis and Bingqing Chen and Siddha Ganju and Sidharth Kathpal and Jyotish Poonganam and Ayush Shivani and Vrushank Vyas and Sahika Genc and Ivan Zhukov and Max Kumskoy and Jean Oh and Eric Nyberg and Sylvia L. Herbert},
  year={2022},
  eprint={2205.02953},
  archivePrefix={arXiv},
  primaryClass={cs.RO}
}
